- About

Getting to know us

- Services

- Managed Services

- Application Services

- Cloud Services

- Data Science

- Consulting Services

- Technology Solutions

Managed Services

Application Services

Data Science

Consulting Services

Technology Solutions

- Industries

- Resources

Resources

- Contact

- Contact us

Exploring Autonomous Data Warehouse: Part 3

Contents

Exploring ADW

Part 3 - Using DataPump to Move Data IN and OUT of ADW

In the previous two blogs we used the DBMS_CLOUD procedure to move data into ADW. Here we are going to look at DataPump to move data in and out of the Cloud. Be careful, this one's code-y. I’ve loaded a 20 million record table into ADW using DBMS_CLOUD procedure above. To export that data using DataPump is pretty straight forward:

expdp admin/XXXX@adwoac_high directory=data_pump_dir tables=house_prices dumpfile=exp%U.dmp parallel=16 filesize=1G logfile=export.log

What’s interesting initially is the directory, i.e. it’s the default directory called data_pump_dir. This is a directory which sits on the OS of ADW but you have no physical access to it. Once you’ve created your exports you can use the following commands to look at the directory

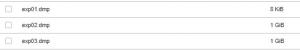

SQL> SELECT * FROM DBMS_CLOUD.LIST_FILES('DATA_PUMP_DIR'); COPY$9_dflt.log 0 dp.log 129 exp01.dmp 8192 exp02.dmp 1073741824 exp03.dmp 1073741824 exp04.dmp 287875072

Now if you want to move that data elsewhere then you use the DBMS_CLOUD package again with the procedure PUT_OBJECT. This allows you to move data from ADW back into Cloud Storage.

BEGIN

DBMS_CLOUD.PUT_OBJECT('ADWOAC','https://objectstorage.uk-london-1.oraclecloud.com/n/XXXXX/b/oacadw/o/exp01.dmp','DATA_PUMP_DIR','exp01.dmp');

END;

/

Now the data is in Cloud storage I’m going to DataPump it back into ADW but instead of using DBMS_CLOUD I will use DataPump and reference the object in Cloud Storage.

I’m using 12.2 DataPump; the procedures vary slightly depending on what you’re using. I’m also using the instant client as well. Ensure you reference the default credential with which you want to access Cloud Storage first in SQLPlus. Once you have done that, use the impdp command.

SQL> alter database property set default_credential = 'admin.adwoac';

The key element of this is dumpfile: note that default_credential is not to be replaced with your default credential, it’s allow impdp to query the DB to verify its default credential, which is admin.adwoac. Then the next part of the line is the exp file location with a wildcard for the file names.

dumpfile=default_credential:https://objectstorage.uk-london-1.oraclecloud.com/n/XXXX/b/oacadw/o/exp%u.dmp

Here is the command in full.

impdp admin/XXXX@adwoac_high directory=data_pump_dir dumpfile=default_credential:https://objectstorage.uk-london-1.oraclecloud.com/n/XXXX/b/oacadw/o/exp%u.dmp REMAP_TABLE=admin:house_prices.admin:house_prices_3 parallel=16 partition_options=merge transform=segment_attributes:n exclude=index, cluster, indextype, materialized_view, materialized_view_log, materialized_zonemap, db_link

So that was some simple of examples of moving data both IN and OUT of ADW. If you want to install the instant client then it’s pretty easy; I just use the RPMs downloaded from Oracle Instance client download page. Key thing to remember is you need the base package and then any additional pieces on top; so for SQL Plus and DataPump I need to install three packages.

https://www.oracle.com/technetwork/topics/linuxx86-64soft-092277.html

yum install oracle-instantclient12.2-basic-12.2.0.1.0-1.x86_64.rpm

yum install oracle-instantclient12.2-sqlplus-12.2.0.1.0-1.x86_64.rpm

yum install oracle-instantclient12.2-tools-12.2.0.1.0-1.x86_64.rpm

Once it’s installed make sure you copy your tnsnames.ora from your ADW wallet into your admin directory of your instance client; which you create. Also ensure you set all your PATHs.

mkdir -p /ur/lib/oracle/12.2/client64/lib/network/admin

export LD_LIBRARY_PATH=/usr/lib/oracle/12.2/client64/lib

export TNS_ADMIN=/usr/lib/oracle/12.2/client64/lib/network/admin

export PATH=$PATH:/usr/lib/oracle/12.2/client64/bin/

Read on for Part 4: Auto-Scaling and Loading Data

.png?width=250&name=stonewater-logo%20(1).png)